Monitoring and Performance

Applications that use LLMs have some challenges that are well known and understood: LLMs are slow, unreliable and expensive.

These applications also have some challenges that most developers have encountered much less often: LLMs are fickle and non-deterministic. Subtle changes in a prompt can completely change a model's performance, and there's no EXPLAIN query you can run to understand why.

Danger

From a software engineers point of view, you can think of LLMs as the worst database you've ever heard of, but worse.

If LLMs weren't so bloody useful, we'd never touch them.

To build successful applications with LLMs, we need new tools to understand both model performance, and the behavior of applications that rely on them.

LLM Observability tools that just let you understand how your model is performing are useless: making API calls to an LLM is easy, it's building that into an application that's hard.

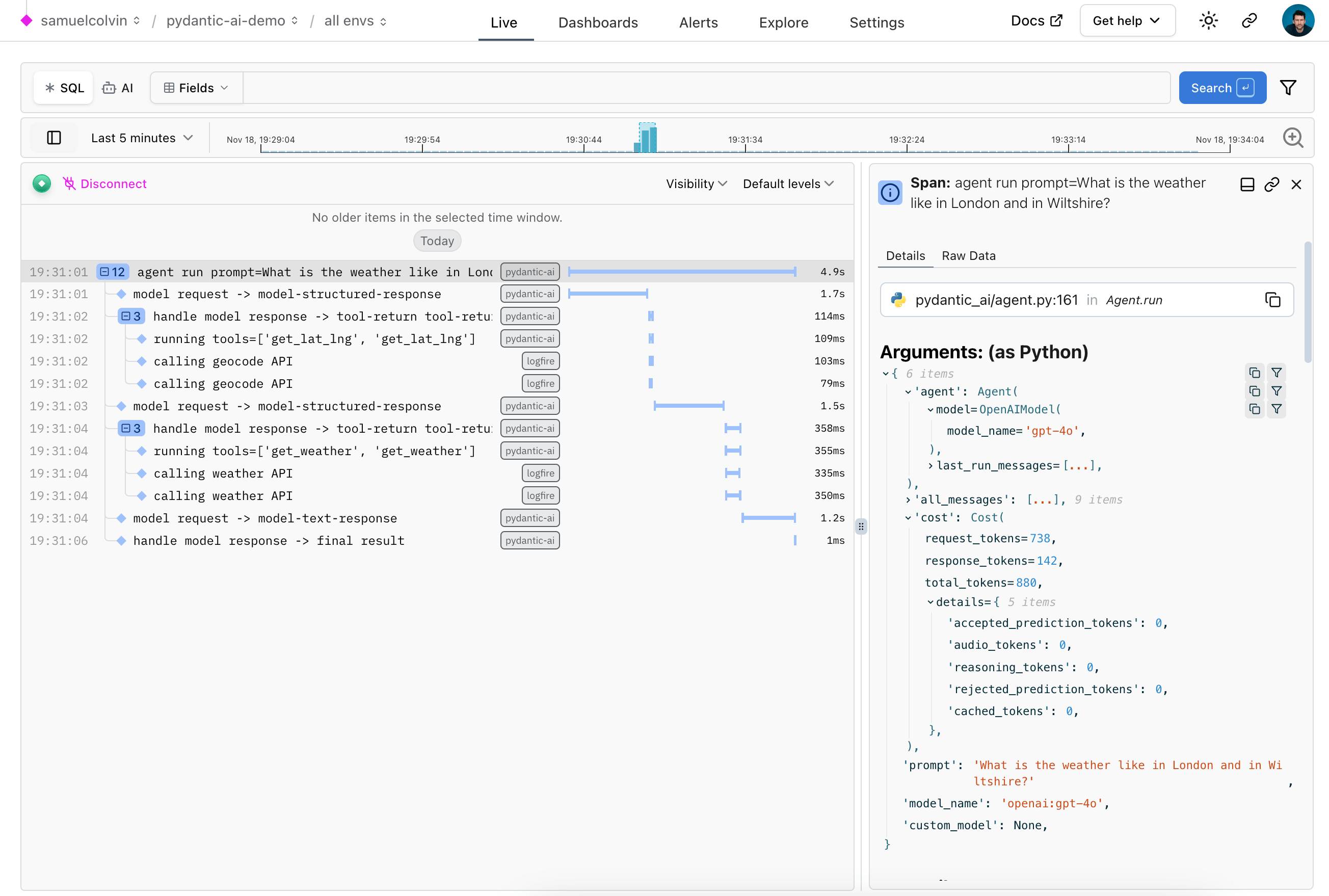

Pydantic Logfire

Pydantic Logfire is an observability platform from the developers of Pydantic and PydanticAI, that aims to let you understand your entire application: Gen AI, classic predictive AI, HTTP traffic, database queries and everything else a modern application needs.

Pydantic Logfire is a commercial product

Logfire is a commercially supported, hosted platform with an extremely generous and perpetual free tier. You can sign up and start using Logfire in a couple of minutes.

PydanticAI has built-in (but optional) support for Logfire via the logfire-api no-op package.

That means if the logfire package is installed and configured, detailed information about agent runs is sent to Logfire. But if the logfire package is not installed, there's virtually no overhead and nothing is sent.

Here's an example showing details of running the Weather Agent in Logfire:

Integrating Logfire

TODO

Debugging

TODO

Monitoring Performance

TODO